A new cloud for a new generation of innovation

Taiga Cloud is Europe’s largest AI cloud service provider, powered by the very latest NVIDIA technology. Our infrastructure-as-a-service offering provides true data sovereignty and runs on carbon-free energy. You can access it through the Taiga Cloud self-service portal or through our API.

4 types of NVIDIA cloud solutions

1 goal: helping you bring your best ideas to life

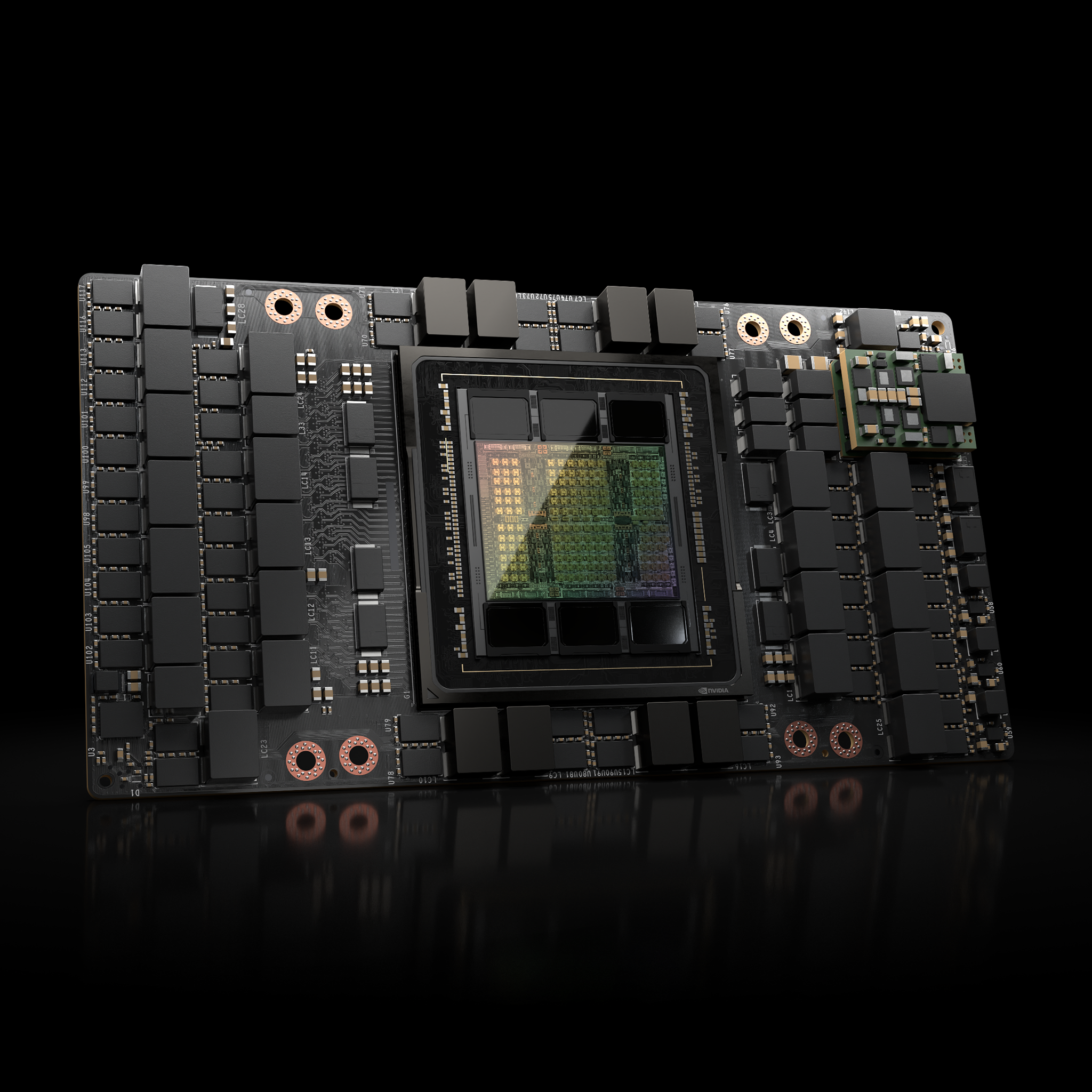

NVIDIA H200 Tensor Core GPUs

With almost double the memory capacity of the NVIDIA H100 Tensor Core GPU, plus advanced performance capabilities, the H200 is a game changer. Our AI platform, Taiga Cloud, is one of the first in Europe to offer instant access to this revolutionary hardware, which delivers 141 gigabytes of HBM3e memory at 4.8 terabytes per second and 4 petaFLOPS of FP8 performance. Get ready to supercharge your AI and HPC workloads with up to 2x the LLM inference performance of the H100, up to 110x faster time to results than CPUs on memory-intensive HPC applications, and a 50% reduction in LLM energy use and TCO.

Top 3 H200 Tensor Core GPU use cases

Market simulations, risk assessment, and fraud detection

Digital twin simulations for factories and logistics

Processing larger datasets for drug discovery and medicine

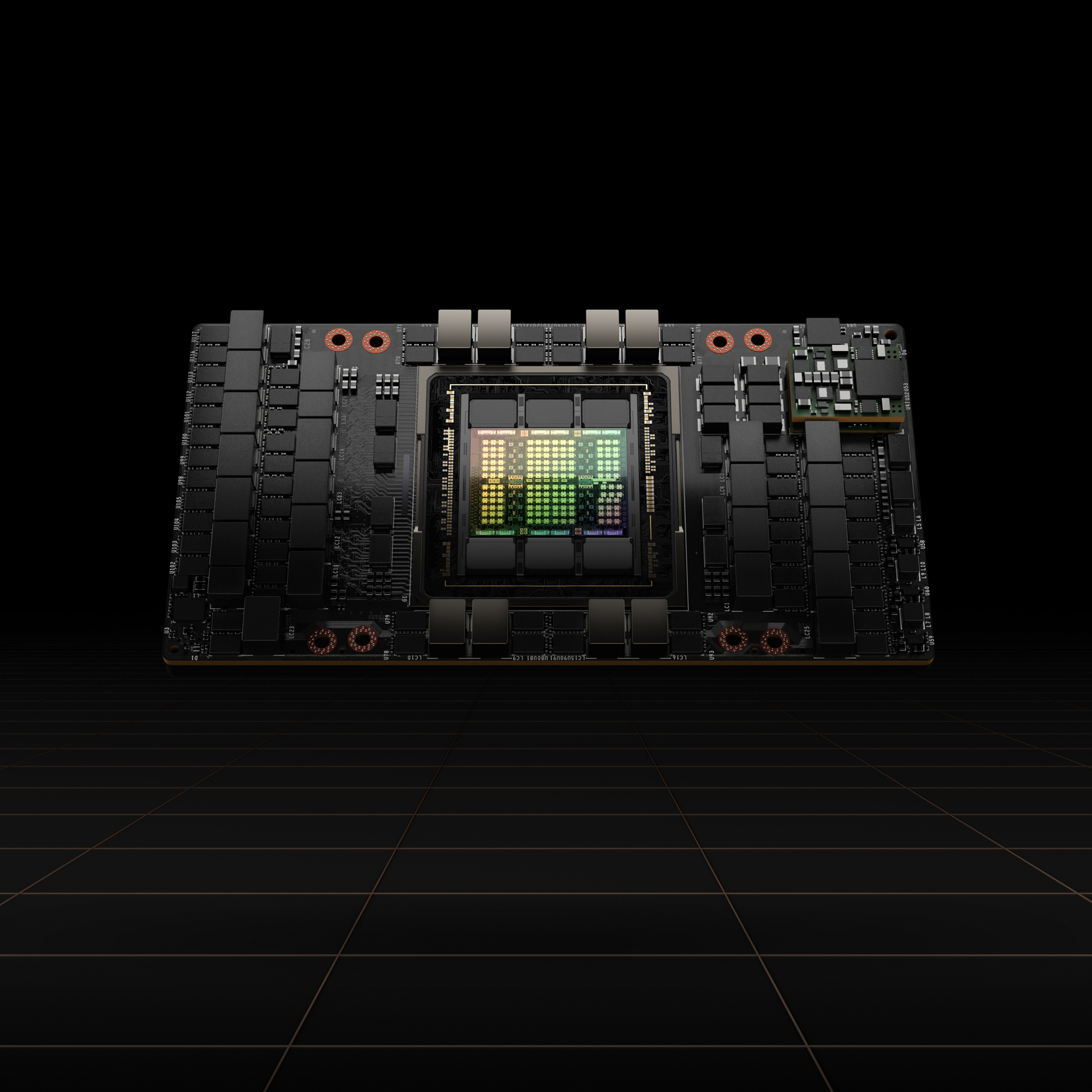

NVIDIA H100 Tensor Core GPUs

Choose the NVIDIA H100 for enterprise AI, with up to 9x faster AI training on the largest models. We configure H100 GPUs into pods of 512, connected into islands of four pods each (2,048 GPUs) using NVIDIA BlueField DPUs and the NVIDIA Quantum-2 InfiniBand platform, giving you an efficient and fast way to train LLMs. This configuration lets businesses deliver AI solutions in a much shorter timeframe. These H100 Tensor Core GPU islands will be spread across our clean-energy European data center estate, providing additional resilience and redundancy.

Top 3 H100 Tensor Core GPU use cases

Industry-leading conversational AI and deep learning applications

AI-aided design for the manufacturing and automotive industries

Advanced medical research and scientific discoveries

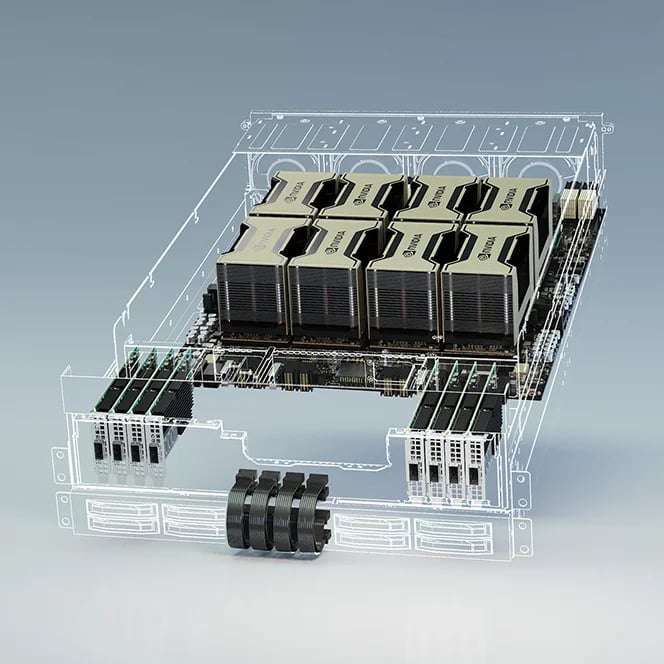

NVIDIA A100 Cloud GPUs

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale for AI, data analytics, and HPC. The A100 offers up to 20x the performance of the previous NVIDIA GPU generation.

Top 3 A100 use cases

Deep learning training

Deep learning inferencing

High performance data analytics

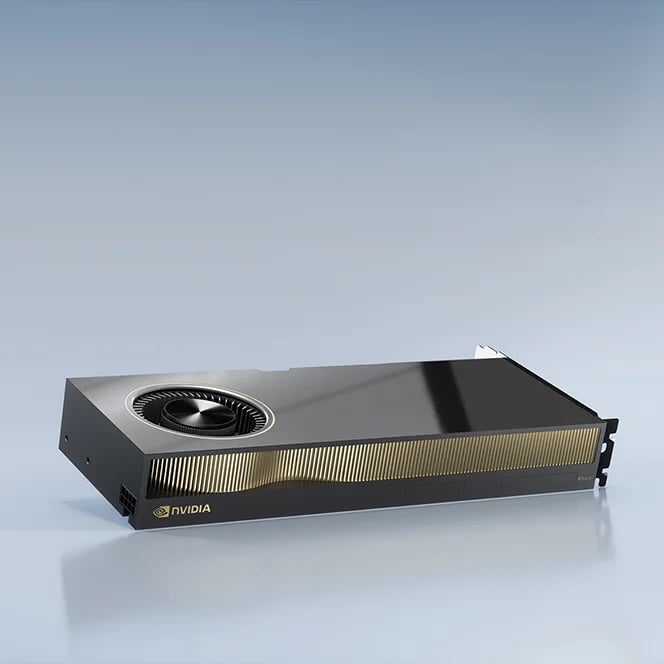

NVIDIA RTX™ A6000 GPUs

The NVIDIA RTX™ A6000 GPU provides the speed and performance that enable engineers to develop innovative products, architects to design cutting-edge buildings, and scientists to make breakthrough discoveries.

Top 3 NVIDIA RTX™ A6000 use cases

Graphics and simulation workflows

Photorealistic rendering, CAD and CAE

AI model training and data science

Operated in Europe. Running on carbon-free energy.

Hosted in Europe to help you meet data sovereignty and compliance requirements

Non-blocking network with DE-CIX access and low latency (sub-10 ms)

Power usage effectiveness (PUE) as low as 1.2

H100 InfiniBand pods of 512 GPUs, combined into islands of 2,048 GPUs or more

NVIDIA GPUs alongside CPU and RAM resources – dedicated to your workload

Access via API or the Taiga Cloud self-service portal
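For reference, PUE is the standard data center efficiency ratio: total energy drawn by the facility divided by the energy delivered to the IT equipment itself. The worked example below assumes an illustrative IT load of 1,000 kWh, which is not a Taiga Cloud figure.

```latex
\mathrm{PUE} = \frac{E_{\text{facility}}}{E_{\text{IT}}}
\qquad
E_{\text{IT}} = 1000\ \text{kWh},\ \mathrm{PUE} = 1.2
\;\Rightarrow\;
E_{\text{facility}} = 1.2 \times 1000\ \text{kWh} = 1200\ \text{kWh}
```

In this example, 200 kWh (20% on top of the compute load) goes to cooling, power distribution and other overhead. A PUE of 1.0 would mean zero overhead.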

Ready to get started?

Our GPU cloud services are built to power your next innovation. Get in touch to learn more about how we work with our partners to accelerate the world's best ideas.