Register today for NVIDIA H100 Tensor Core GPUs

Taiga Cloud is the first European AI cloud service provider to offer instant access to a clean, secure, and compliant NVIDIA H100 Tensor Core GPU network.

Fast, secure, and revolutionary

NVIDIA H100 Tensor Core GPUs

Multiple GPUs are essential for large data sets, complex simulations, and AI and HPC workflows. The NVIDIA H100 Tensor Core GPU, NVIDIA's ninth-generation data center GPU, is designed to deliver an order-of-magnitude performance leap for large-scale AI and HPC workloads over the prior-generation NVIDIA A100 GPU.

Benefit from:

- Faster matrix computations than ever before on an even broader array of AI and HPC workloads

- Secure Multi-Instance GPU (MIG) partitions the GPU into isolated, right-size instances to maximize quality of service (QoS) for smaller tasks

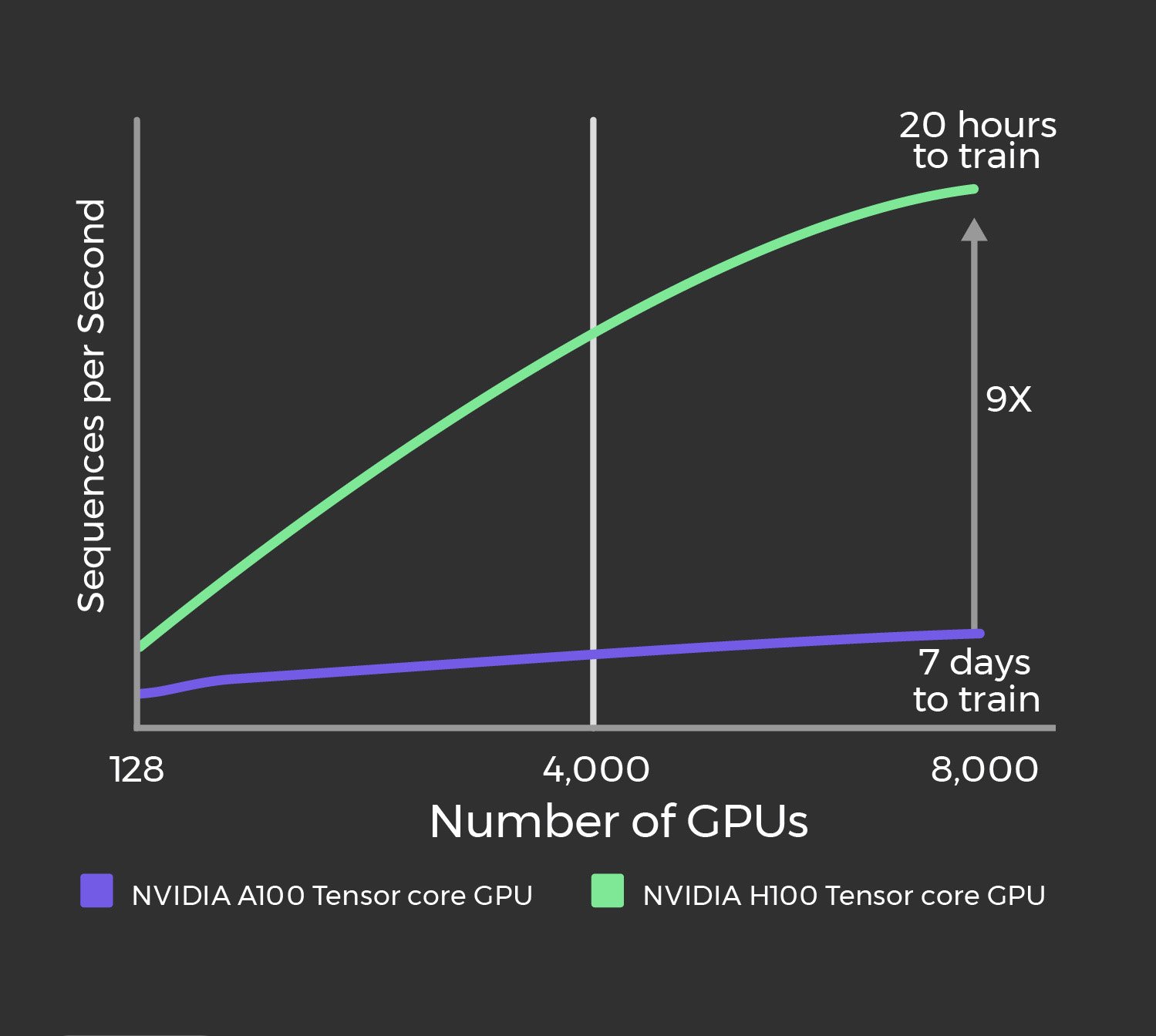

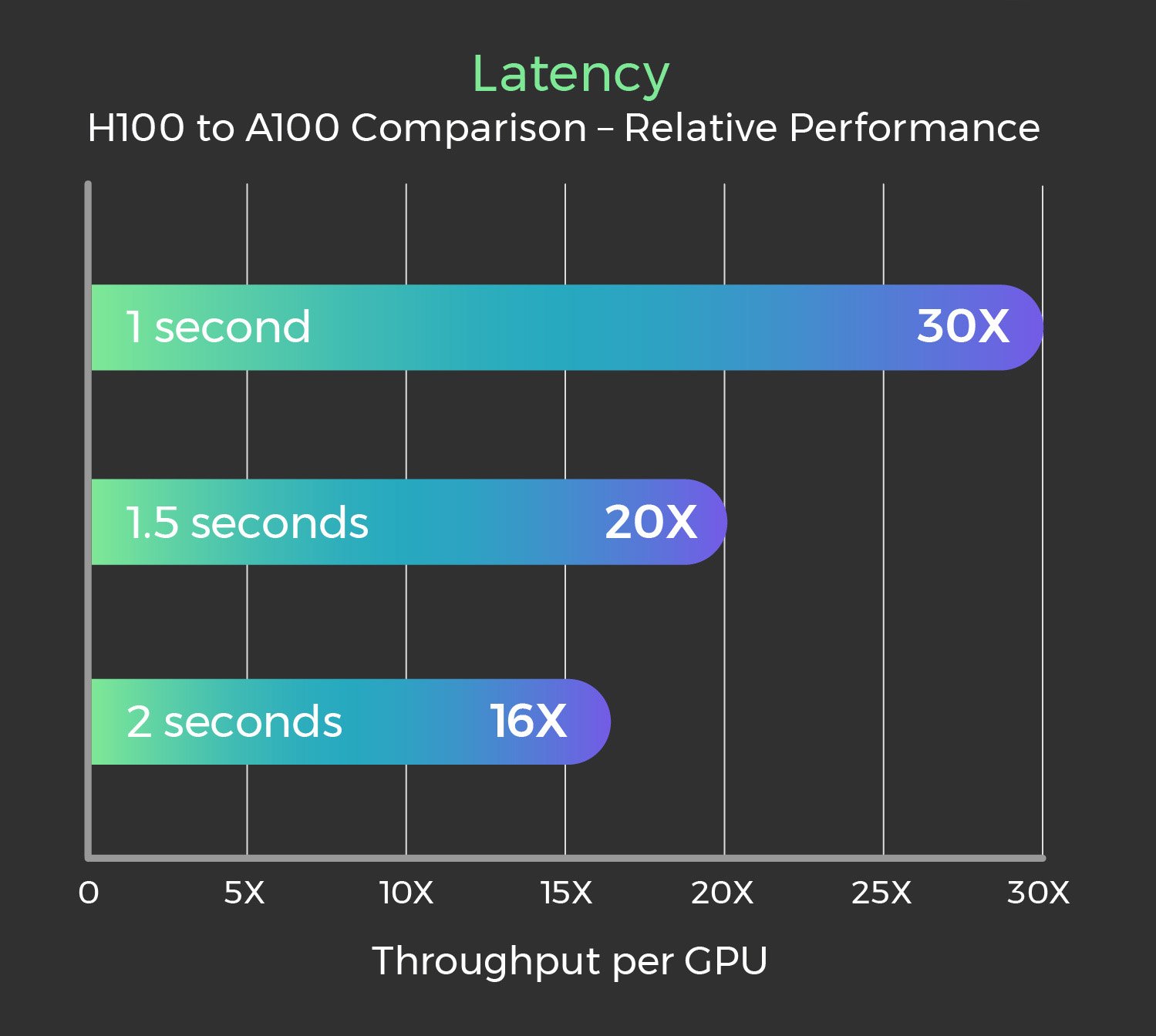

- Up to 9X faster AI training and up to 30X faster AI inference compared to the prior GPU generation.

For more information about the NVIDIA H100 Tensor Core GPUs:

Up to 9X Higher AI Training on Largest Models

Mixture of Experts (395 Billion Parameters)

Projected performance subject to change. Training Mixture of Experts (MoE) Transformer Switch-XXL variant with 395B parameters on 1T token dataset | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB

Up to 30X Higher AI Inference Performance on Largest Models

Megatron Chatbot Inference (530 Billion Parameters)

Projected performance subject to change. Inference on Megatron 530B parameter model chatbot for input sequence length=128, output sequence length=20 | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB

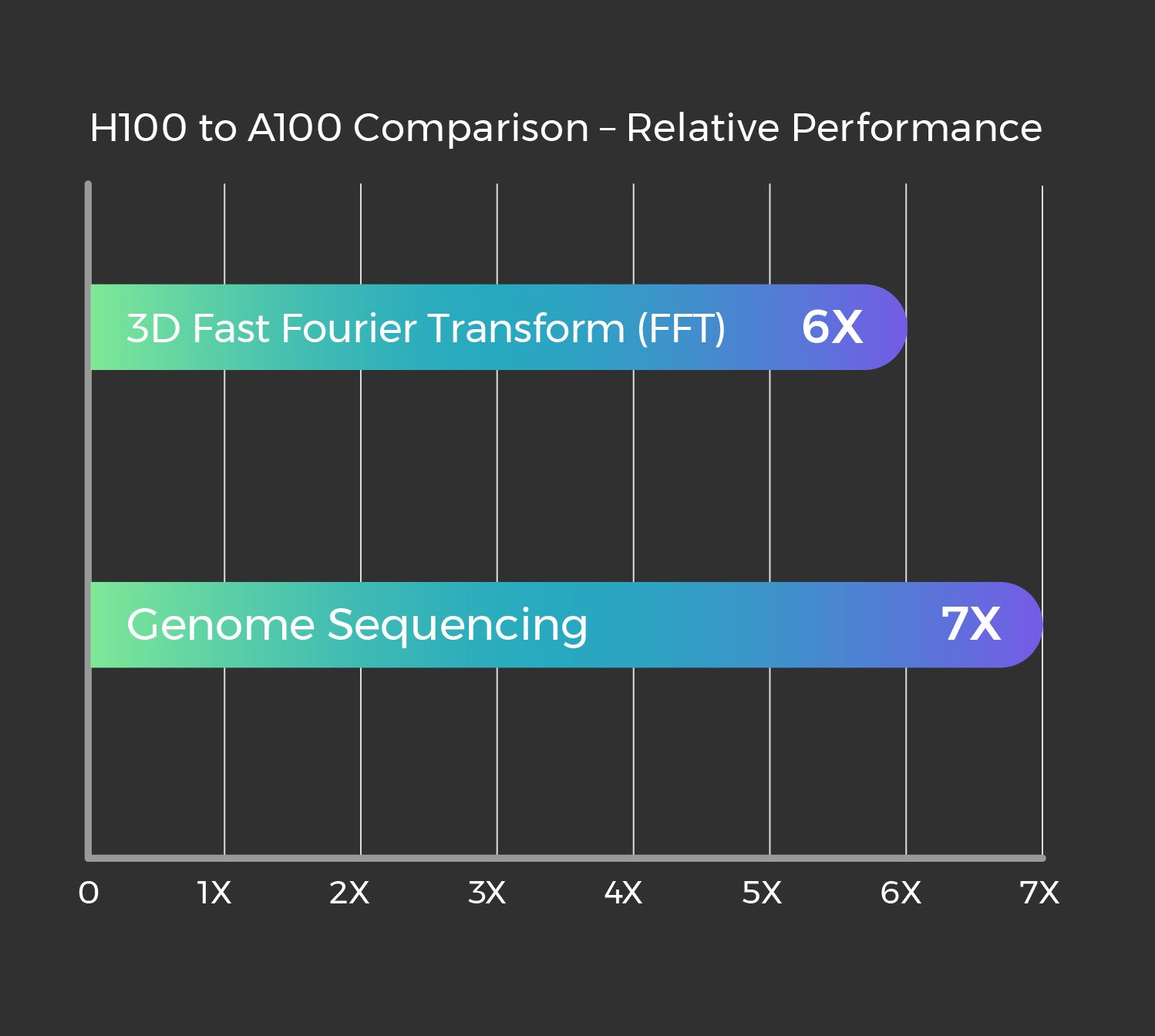

Up to 7X Higher Performance for HPC Applications

Projected performance subject to change. 3D FFT (4K^3) throughput | A100 cluster: HDR IB network | H100 cluster: NVLink Switch System, NDR IB | Genome Sequencing (Smith-Waterman) | 1 A100 | 1 H100

NVIDIA H100 Tensor Core GPU benefits

Operated by Taiga Cloud in Europe.

Hosted in Europe

Achieve sovereignty and compliance standards.

Non-blocking network

With DE-CIX access and low latency (sub-10 ms).

PUE performance between 1.15 and 1.06

Customers only pay for the energy they consume.
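To put the PUE range in perspective: power usage effectiveness is the ratio of total facility energy to the energy used by the IT equipment itself, so a lower value means less overhead for cooling and power distribution. The short sketch below works through that arithmetic for the two ends of the quoted range. The 1,000 kWh IT load and the function names are hypothetical, chosen only for illustration, and the sketch does not describe how Taiga Cloud meters or bills energy.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE value."""
    if it_energy_kwh < 0:
        raise ValueError("IT energy must be non-negative")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 by definition")
    # PUE = total facility energy / IT equipment energy
    return it_energy_kwh * pue


def overhead_kwh(it_energy_kwh: float, pue: float) -> float:
    """Non-IT energy (cooling, power distribution, etc.) implied by the PUE."""
    return facility_energy_kwh(it_energy_kwh, pue) - it_energy_kwh


if __name__ == "__main__":
    it_load_kwh = 1_000.0  # hypothetical IT load, for illustration only
    for pue in (1.06, 1.15):  # the two ends of the range quoted above
        print(f"PUE {pue}: overhead of about {overhead_kwh(it_load_kwh, pue):.0f} kWh")
```

Under these assumptions, the overhead on top of the IT load stays between roughly 6% and 15%.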

InfiniBand pods of 512 GPUs

These pods are connected into islands of four pods each (2,048 GPUs) using NVIDIA BlueField DPUs and the NVIDIA Quantum-2 InfiniBand platform. This configuration offers an efficient, fast way to train LLMs and lets businesses deliver AI solutions in a much shorter timeframe.
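The pod and island sizes above translate into simple capacity arithmetic. The following minimal sketch estimates how many pods and islands a job of a given size would span, assuming GPUs are counted in whole pods. Only the two constants come from the text; the `footprint` helper is hypothetical and does not reflect how Taiga Cloud actually schedules or allocates GPUs.

```python
import math

GPUS_PER_POD = 512       # InfiniBand pod size stated in the text
PODS_PER_ISLAND = 4      # pods per island stated in the text
GPUS_PER_ISLAND = GPUS_PER_POD * PODS_PER_ISLAND  # 2,048 GPUs


def footprint(gpus_needed: int) -> dict:
    """Estimate the pods and islands spanned by a job (hypothetical helper)."""
    if gpus_needed <= 0:
        raise ValueError("gpus_needed must be positive")
    # Round up: a partially used pod or island still counts as one.
    pods = math.ceil(gpus_needed / GPUS_PER_POD)
    islands = math.ceil(pods / PODS_PER_ISLAND)
    return {"pods": pods, "islands": islands}


if __name__ == "__main__":
    for n in (256, 512, 2048, 3000):
        print(n, footprint(n))
```

A job that fits within a single pod or island stays inside one InfiniBand domain of that size, which is the property the configuration above is built around.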

Without overbooking

NVIDIA GPUs as well as CPU and RAM resources.

H100 Tensor Core GPUs - Top use cases and industries

Higher education and research

From your own AI, ML, or big data project to rendering in architecture or media, our powerful cloud IaaS stack frees your IT team from having to set up and maintain a complex infrastructure for AI or other HPC tasks, while protecting your budget from high acquisition expenses and hidden operational costs.

AI-aided design for the manufacturing and automotive industries

The H100 Tensor Core GPUs deliver up to 7X higher performance for HPC applications and securely accelerate all workloads, from enterprise to exascale. This enables AI breakthroughs in areas such as engineering and production. There are also exciting use cases in object detection, image segmentation, design, and visualization, which benefit from real-time data interactivity.

Health care and life science

The H100 Tensor Core GPUs revolutionize advanced medical and scientific research, from weather forecasting to scientific simulations, and allow for more rapid developments in drug discovery, genomics, and computational biology thanks to the GPUs' increased stability for AI and other HPC workloads.

Stay ahead in AI innovation

Pre-register for H200 GPUs to future-proof your projects.