Ready to supercharge your AI and HPC workloads?

Taiga Cloud, Northern Data Group’s AI cloud service provider, is the first European AI cloud provider to offer instant access to NVIDIA H200 Tensor Core GPUs.

Revolutionary hardware for next-generation innovation

With nearly double the memory capacity of the NVIDIA H100 Tensor Core GPU and 1.4x its memory bandwidth, the H200 is a game changer.

• 141 gigabytes (GB) of HBM3e memory

• 4.8 terabytes per second (TB/s) of memory bandwidth, 1.4x more than the H100

• 4 petaFLOPS of FP8 performance
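The "almost double" and "1.4x" comparisons can be checked with simple ratios. The sketch below takes the H200 figures from this page; the H100 figures (80 GB, 3.35 TB/s) are an assumption based on NVIDIA's published H100 SXM specifications and do not appear on this page.

```python
# H200 figures come from this page. The H100 figures are ASSUMED
# (NVIDIA's published H100 SXM specs), not stated on this page.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8
H100_MEMORY_GB = 80          # assumed H100 SXM capacity
H100_BANDWIDTH_TBPS = 3.35   # assumed H100 SXM bandwidth


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`, rounded to 2 decimals."""
    return round(new / old, 2)


capacity_ratio = ratio(H200_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

if __name__ == "__main__":
    print(f"Memory capacity vs H100: {capacity_ratio}x")
    print(f"Memory bandwidth vs H100: {bandwidth_ratio}x")
```

Under these assumptions the capacity ratio is about 1.76x ("almost double") and the bandwidth ratio about 1.43x ("1.4x").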


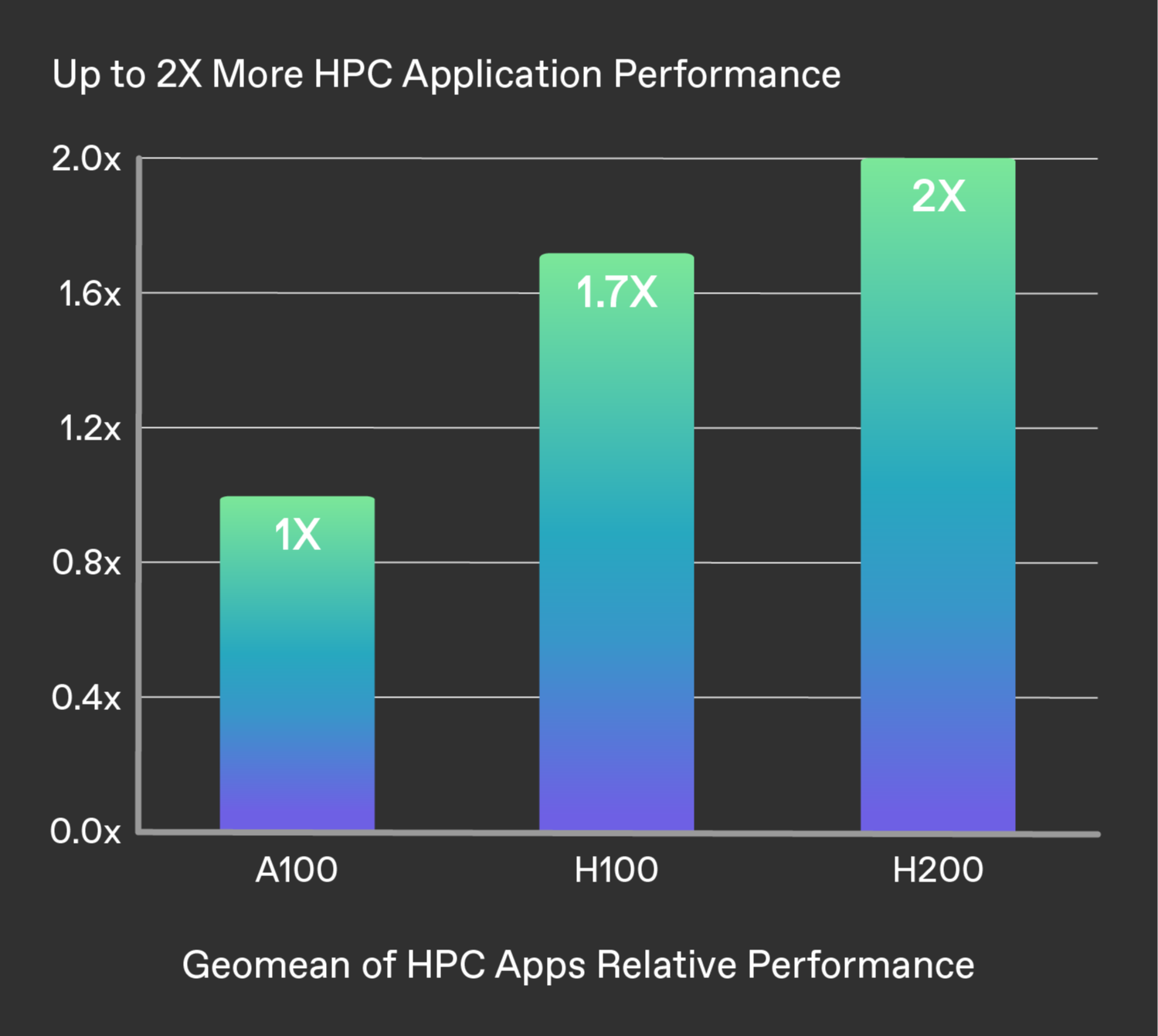

Up to 2x the LLM inference performance of the H100

AI is constantly evolving, and businesses rely on large language models (LLMs) such as Llama 2 70B to address a wide range of inference needs. When deployed at scale for a massive user base, an AI inference accelerator must deliver the highest throughput at the lowest total cost of ownership (TCO).

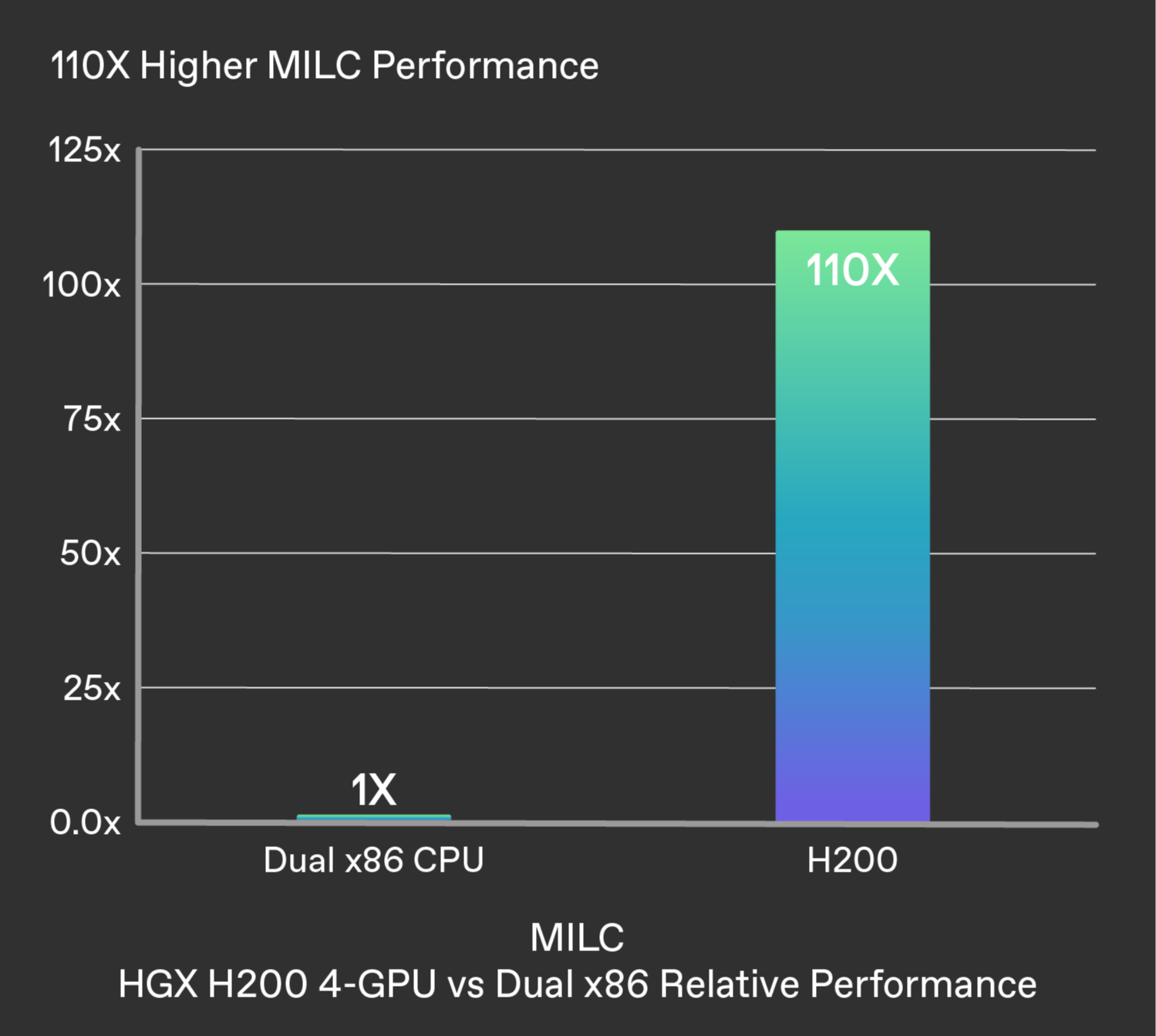

Up to 110x faster time to results than CPUs

Memory bandwidth enables faster data transfer and reduces bottlenecks in complex processing. It is crucial for memory-intensive HPC applications such as simulations, scientific research and AI. The H200’s higher bandwidth ensures that data can be accessed and manipulated efficiently.
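To make the link between bandwidth and speed concrete, here is a back-of-envelope sketch that assumes a workload limited purely by memory bandwidth (latency, caches, compute and KV-cache traffic are ignored). The example workload, Llama 2 70B with 8-bit weights occupying roughly 70 GB, and the H100 bandwidth of 3.35 TB/s are both assumptions for illustration; this is a rough upper bound, not a benchmark, and it does not reproduce the figures quoted on this page.

```python
"""Bandwidth-bound estimates: a rough sketch, not a benchmark.

Assumptions: performance is limited only by memory bandwidth; latency,
caches, compute and KV-cache traffic are ignored. The H100 bandwidth and
the ~70 GB weight size are assumed values, not figures from this page.
"""

H200_BANDWIDTH_TBPS = 4.8
H100_BANDWIDTH_TBPS = 3.35  # assumed H100 SXM figure


def stream_time_ms(gigabytes: float, bandwidth_tbps: float) -> float:
    """Minimum time in milliseconds to read `gigabytes` of data once.

    1 TB/s moves 1 GB per millisecond, so GB / (TB/s) is already in ms.
    """
    return gigabytes / bandwidth_tbps


def max_decode_tokens_per_s(weight_gb: float, bandwidth_tbps: float) -> float:
    """Upper bound on batch-1 decode speed if every token reads all weights once."""
    return 1000.0 / stream_time_ms(weight_gb, bandwidth_tbps)


# Hypothetical workload: Llama 2 70B with 8-bit weights, roughly 70 GB.
WEIGHTS_GB = 70.0

if __name__ == "__main__":
    for name, bw in (("H200", H200_BANDWIDTH_TBPS), ("H100", H100_BANDWIDTH_TBPS)):
        t = stream_time_ms(WEIGHTS_GB, bw)
        rate = max_decode_tokens_per_s(WEIGHTS_GB, bw)
        print(f"{name}: {t:.1f} ms per pass over the weights, <= {rate:.0f} tokens/s")
```

In this simplified model the bound scales linearly with bandwidth, so the H200's advantage over the H100 is simply the bandwidth ratio; real systems also depend on batching, memory capacity and compute.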

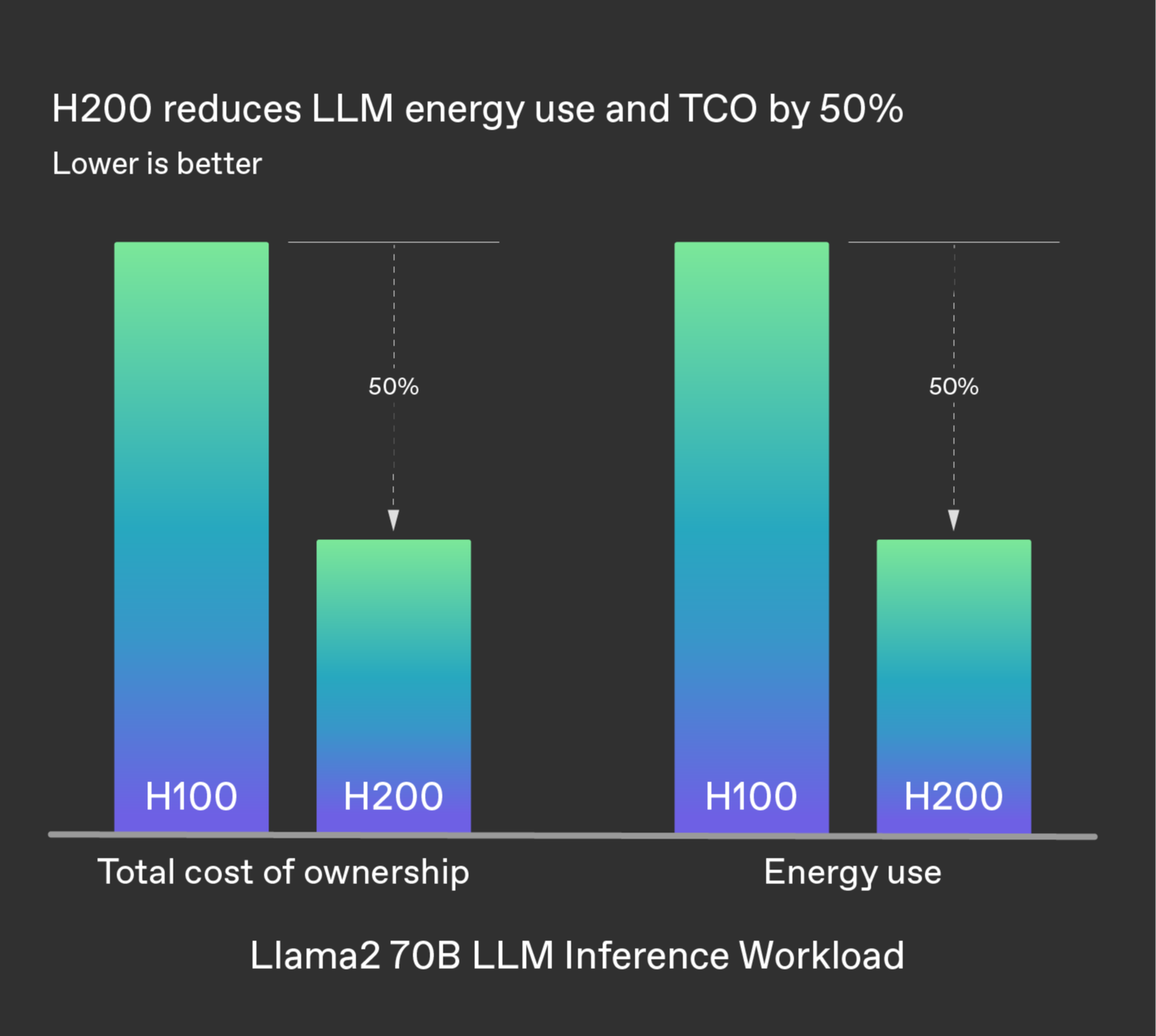

50% reduction in LLM energy use and TCO

The H200 delivers higher performance within the same power profile as the H100, improving energy efficiency and lowering TCO. This gives the AI and scientific communities an economic edge.