Empowering AI pioneers at every step of their journey

We offer solutions designed for every stage of your AI journey, from rapid prototyping to enterprise-scale deployment across different geographies.

Taiga AI Cloud

Access tailored AI compute, on demand, immediately

Self-service, automated platform offering AI compute on demand. This model provides immediate, flexible access to state-of-the-art infrastructure on a transparent pay-as-you-go (PAYG) basis with per-minute billing.

Learn More

Developer Platform

Build, develop and deploy at pace

A unified environment providing access to NVIDIA AI Enterprise (NVAIE) and essential tools for building, deploying, and scaling AI-powered applications on cloud infrastructure.

Learn More

Intelligent Grid

Scale your AI application

Distributed AI infrastructure that connects edge devices to centralized compute resources, enabling real-time data processing, model inference, and orchestration across the network using NVIDIA reference architecture guidelines.

Learn More

Taiga AI Cloud

Access the latest AI technology instantly

Self-service, automated platform offering AI compute on demand. This model provides immediate, flexible access to state-of-the-art infrastructure on a transparent pay-as-you-go (PAYG) basis with per-minute billing.

Multi-tenant, multi-region platform with self-service registration

Managed Kubernetes Support

Full observability, autoscaling, Terraform support

Instant Availability: quickly spin up resources as needed

Most Popular On-Demand AI Cloud Compute Packages

$2.80 / hour

Run AI workloads instantly with 1× NVIDIA H100 SXM GPU on demand: no commitments, full performance.

Includes:

24 vCPUs

208 GB RAM

2.9 TB local NVMe

$11.20 / hour

Purpose-built for deep learning and LLM training: full performance, instant scale.

Includes:

108 vCPUs

976 GB RAM

11.5 TB local NVMe

$22.40 / hour

Run large-scale LLM training or inference with 8× NVIDIA H100 SXM GPUs: full NVLink bandwidth, zero friction.

Includes:

220 vCPUs

2,000 GB RAM

23.0 TB local NVMe
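
As a rough illustration of how per-minute billing could play out against the hourly rates listed above, the sketch below prorates each rate by the minute. The proration rule (hourly rate divided by 60, with every started minute billed), the function name, and the package labels are assumptions made for illustration, not Taiga Cloud's published billing logic.

```python
import math
from decimal import Decimal, ROUND_HALF_UP

# Hourly list prices (USD) taken from the packages above.
# The keys are hypothetical labels, not official product names.
HOURLY_RATES = {
    "h100_x1": Decimal("2.80"),
    "mid_tier": Decimal("11.20"),
    "h100_x8": Decimal("22.40"),
}


def estimate_cost(package: str, seconds_used: float) -> Decimal:
    """Estimate a charge under an assumed per-minute proration.

    Assumption: every started minute is billed at hourly_rate / 60.
    """
    minutes = math.ceil(seconds_used / 60)
    cost = HOURLY_RATES[package] * minutes / Decimal(60)
    # Round to whole cents for display.
    return cost.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # 90 minutes on the single-GPU package.
    print(estimate_cost("h100_x1", 90 * 60))
```

Under this assumption, 90 minutes on the single-GPU package would come to $4.20, compared with $5.60 if usage were rounded up to two full hours.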

Developer Platform

Build with the best development tools and hardware at your fingertips

A unified environment providing access to NVIDIA AI Enterprise (NVAIE) and essential tools for building, deploying, and scaling AI-powered applications on cloud infrastructure.

Fast start with pre-integrated NVIDIA ecosystem

Developer-friendly experience from prototype to production

Scalable and secure by design, with enterprise-ready support

Accelerate AI development with pre-built models and APIs (including NVIDIA NIM and NeMo) for faster deployment and a quicker go-to-market

Launch-ready AI infrastructure from Taiga Cloud

Access to NVIDIA AI Enterprise licenses in Europe

Intelligent Grid

Scale your AI applications without limits

A distributed AI infrastructure connecting edge devices to centralized compute resources, enabling real-time data processing, model inference, and orchestration across the network using NVIDIA reference architecture guidelines.

Enables low-latency AI at the edge, with central coordination

Optimizes performance and resource usage across the network

Accelerates time to insight with an integrated, adaptable stack

Sovereignty: data sovereignty, data residency, EU Jurisdiction, EU entities, European cloud fabric, identity, authorization stack, ISO 27001, GDPR

Cutting-Edge Infrastructure

High-performance bare-metal H100/H200 clusters, purpose-built for large-scale AI training and inference — deployed in fully compliant EU data centers. Built on NVIDIA’s Cloud Partner Reference Architecture, each cluster delivers uncompromised performance, full data sovereignty, and predictable, fixed pricing for enterprise-scale workloads.

Section 1 Slider

We "Believe" in your breakthrough ideas

Your breakthrough ideas need more than just compute: they need belief, backing, and the right environment to grow. Through the AI Accelerator, we help early-stage AI pioneers access GPUs, expert mentoring, and direct collaboration with our partner NVIDIA. Our aim is to power the world's best ideas.

"Build" your solution

"Own" - Claim your space, your way

Dedicated Cluster

"Scale" - Expand without limits

Managed Kubernetes GPU worker nodes

"Deliver" - Bring your impact to the world

AI Intelligent Grid Everywhere Inference

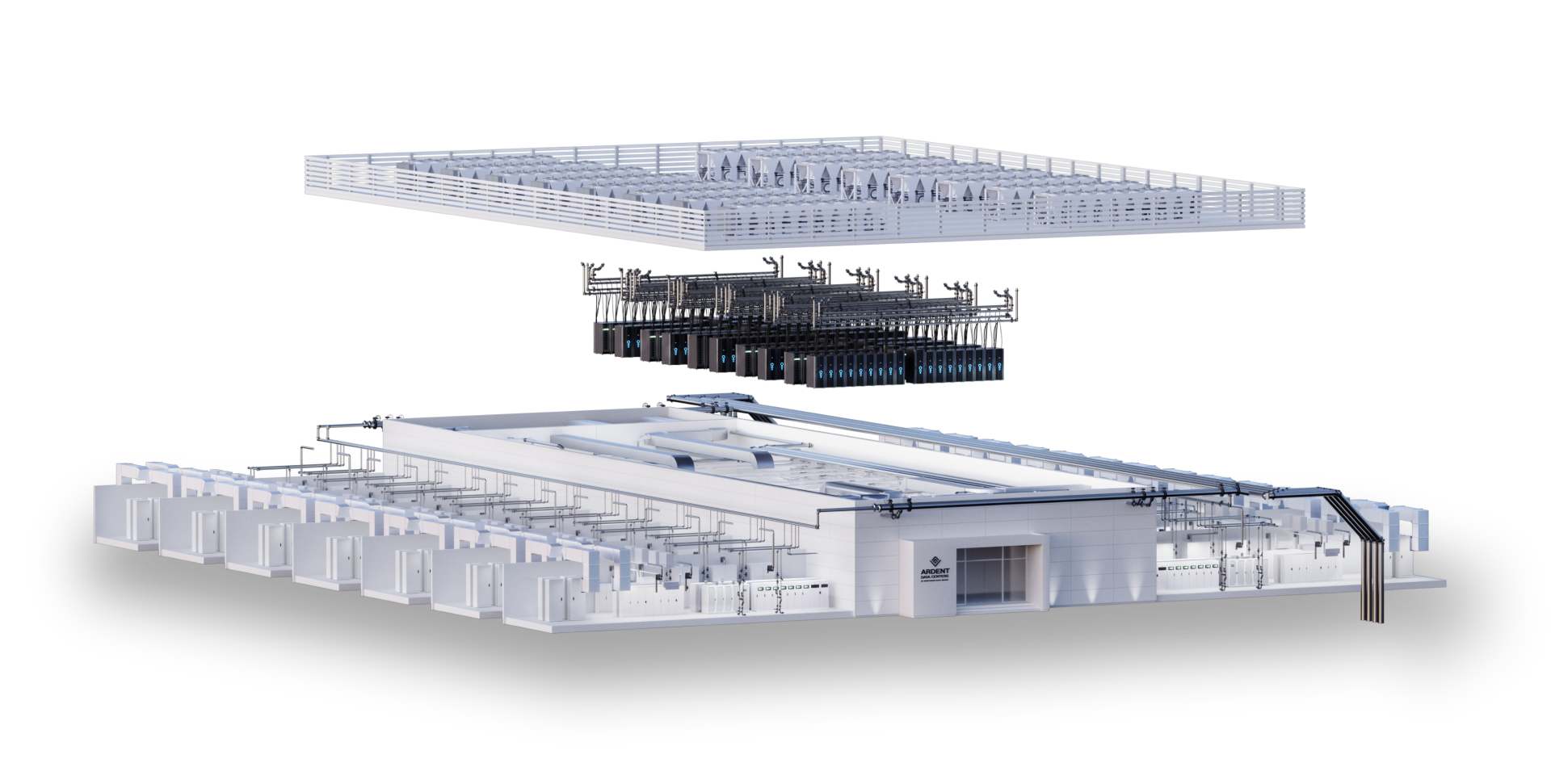

Transition to Ardent

Ardent redefines modular data center scalability, offering rapid deployment, cost efficiency, and unmatched flexibility for AI, Cloud, and HPC workloads.

0 Months

Move-in Ready

0 +MW

per ARDA120 DC

0KW

per Rack IT load

Flexible rack & section configurations

ARDAI20 offers fully customizable racks and sections, allowing you to tailor configurations to meet specific compute, storage, or hybrid workload requirements. As demands evolve, the modular design supports scalable sections that flexibly adapt layout, power delivery, and cooling capacity. This architecture ensures optimized efficiency, seamlessly integrating performance with long-term sustainability.

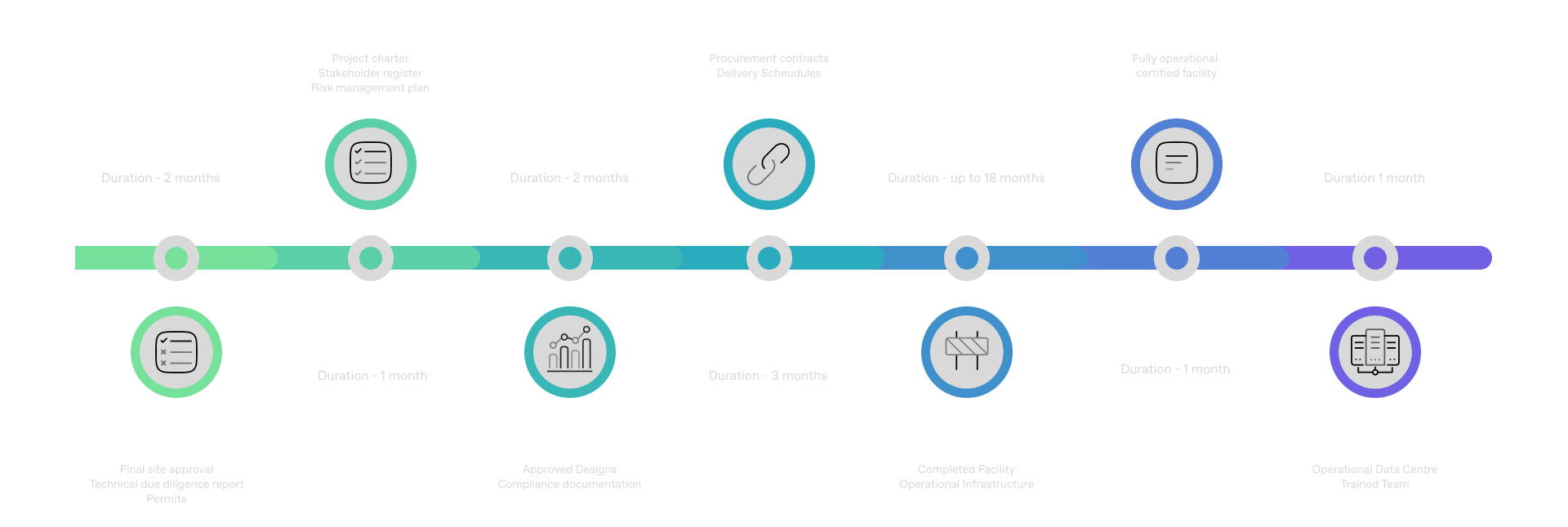

Implementation roadmap highlights

Data center (DC) design and construction typically takes between 1.5 and 3 years to complete. A modular data center (MDC) could expedite the go-to-market (GTM) timeline.

Colocation

Ardent's colocation services offer scalable, sustainable, and high-performance infrastructure tailored for enterprises, AI companies, and cloud providers.

Why Ardent colocation?

Our colocation services deliver energy-efficient infrastructure with advanced cooling, scalable capacity, strategic locations, and high availability — ensuring secure, cost-effective, and sustainable growth.

EU Sovereignty Built-In

Taiga Cloud is one of the few cloud providers offering EU sovereignty. At a time when data ownership and control matter more than ever, many global cloud providers are required to comply with the data policies of the countries they operate in. As part of Northern Data Group, Taiga Cloud leverages a European footprint of local data centers, open-stack, and region-specific services to protect autonomy.

We are focused on data, operational, and technology sovereignty. Taiga Cloud will never store, process, encrypt or transfer data under the jurisdiction of other countries, and our customers maintain full ownership over the data within their cluster hardware.

Data Sovereignty

As a data-sovereign organization, we retain autonomy over our own data and ensure secure access to it.

Operational Sovereignty

We are independent from individual platforms when operating software and are free to decide where to run an application.

Technology Sovereignty

We verify that software functions correctly, which is only possible with full transparency over the source code, through open-source or shared-source software.

The Benefits of Taiga Cloud

Reliable

Enterprise-grade AI infrastructure with NVIDIA-certified GPUs, high-performance interconnects, and SLA-backed uptime — designed for training and production workloads.

Simplified

A simplified AI deployment stack with managed Kubernetes, Slurm, and developer tooling that removes operational friction from training and inference workflows.

Full-lifecycle support

Full-lifecycle support, from data ingestion and curation to pretraining, fine-tuning, testing, guardrailing, and deployment, all within a unified, SLA-backed infrastructure.

Low-latency

Low-latency inference edge network reducing time-to-response for real-time AI applications.

European data sovereignty

European data sovereignty for enterprises that need to comply with GDPR and other European regulatory frameworks.

Data centers, revolutionized

Ardent pioneers high-performance, scalable colocation and modular data centers, delivering secure, efficient, and rapidly deployable infrastructure for AI, edge computing, and enterprise workloads.