Register today for NVIDIA GB200 GPUs

Reserve your access now to NVIDIA GB200 NVL72, which delivers unparalleled AI performance in a secure and compliant environment.

Unleashing AI at Unprecedented Speed and Scale: NVIDIA GB200 GPUs

The GB200 NVL72 redefines AI performance, delivering 30X faster inference for trillion-parameter models. With 72 Blackwell GPUs and 36 Grace CPUs, it functions as a single powerful AI engine. Designed for efficiency with liquid cooling, it seamlessly scales AI workloads for enterprise, research, and next-generation data centers.

Benefit from:

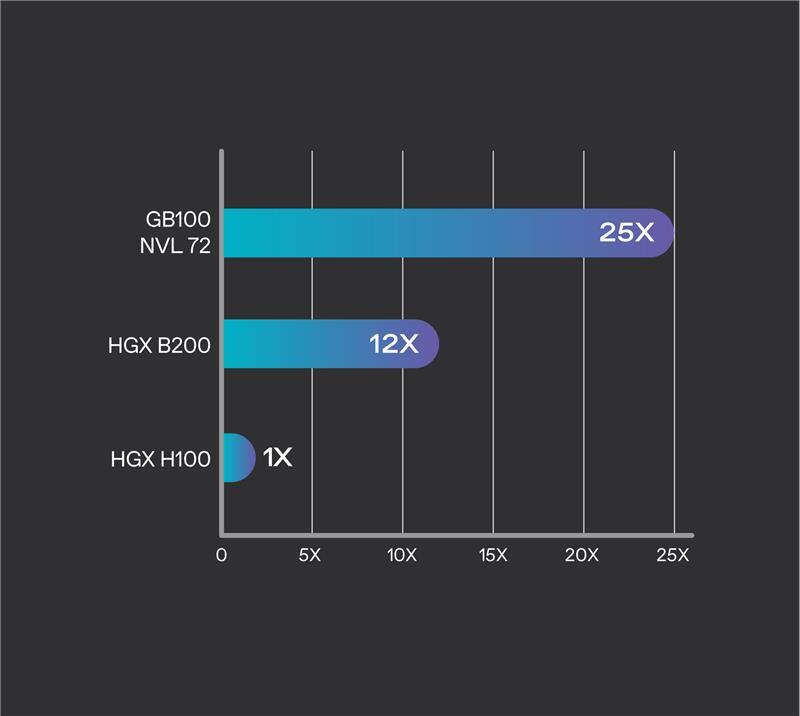

- Compared to NVIDIA H100 air-cooled infrastructure, GB200 delivers 25X more performance at the same power while reducing water consumption.

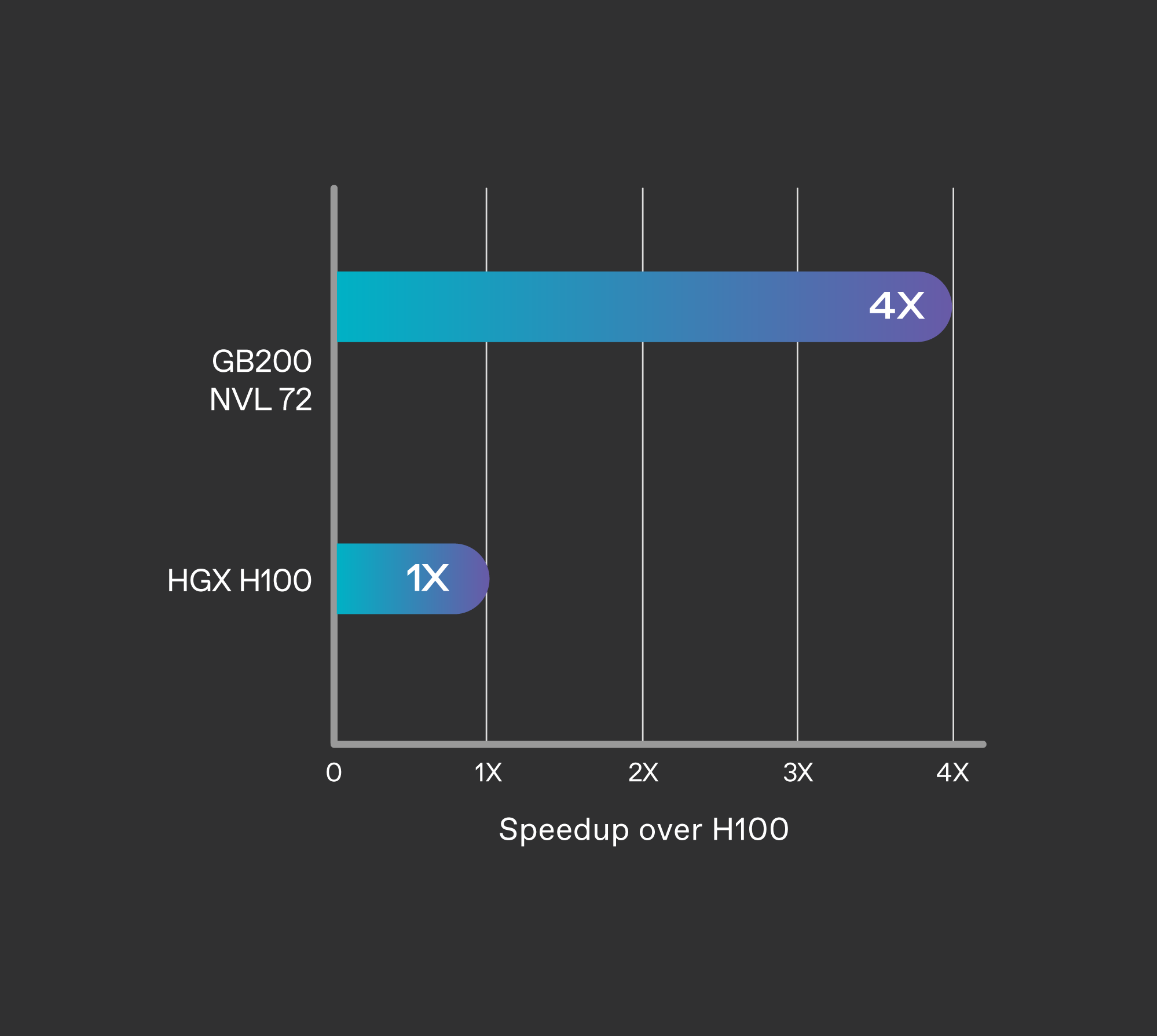

- Faster second-generation Transformer Engine featuring FP8 precision, enabling 4X faster training for large language models at scale.

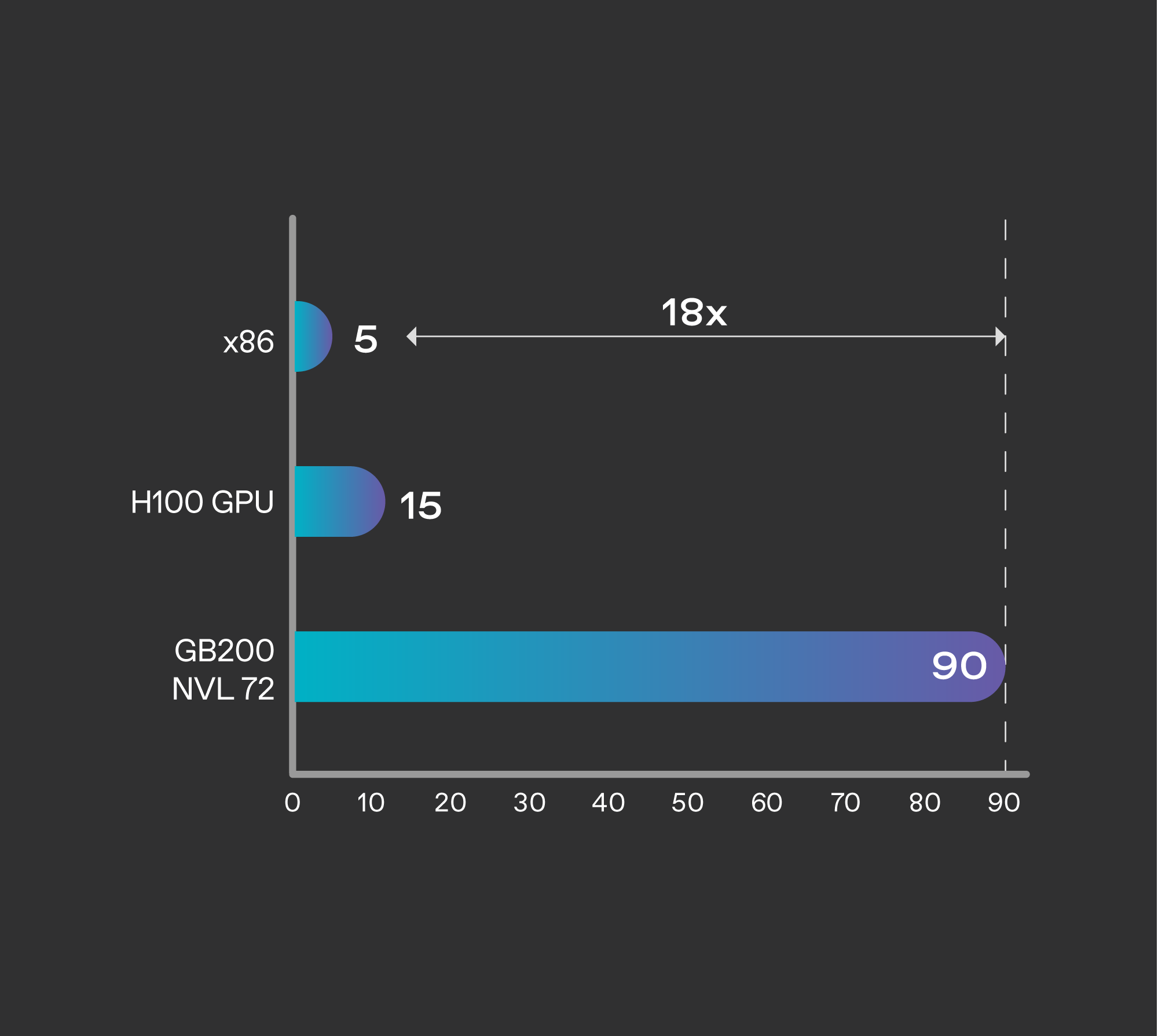

- Speed up key database queries by 18X compared to CPU and deliver 5X better TCO.

For more information about the NVIDIA GB200 GPUs:

GB200 delivers 25X more performance at the same power while reducing water consumption.

Liquid-cooled GB200 NVL72 racks reduce a data center’s carbon footprint and energy consumption. Liquid cooling increases compute density, reduces the amount of floor space used, and facilitates high-bandwidth, low-latency GPU communication with large NVLink domain architectures.

Remarkable 4X faster training for large language models at scale.

GB200 NVL72 includes a faster second-generation Transformer Engine featuring FP8 precision, enabling 4X faster training for large language models at scale. This breakthrough is complemented by fifth-generation NVLink, which provides 1.8 terabytes per second (TB/s) of GPU-to-GPU interconnect bandwidth, together with InfiniBand networking and NVIDIA Magnum IO™ software.
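To put the interconnect figure in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the quoted 1.8 TB/s applies per GPU and simply multiplies by the 72 GPUs in an NVL72 rack; real throughput depends on topology and workload, so treat the result as a naive upper bound. The function names are illustrative only.

```python
# Figures quoted on this page; treating 1.8 TB/s as a per-GPU value is an assumption.
GPUS_PER_NVL72 = 72
NVLINK_TBPS_PER_GPU = 1.8  # terabytes per second


def aggregate_nvlink_tbps(gpus: int = GPUS_PER_NVL72,
                          per_gpu_tbps: float = NVLINK_TBPS_PER_GPU) -> float:
    """Naive upper bound: per-GPU bandwidth multiplied by the GPU count."""
    return gpus * per_gpu_tbps


def transfer_seconds(size_tb: float,
                     link_tbps: float = NVLINK_TBPS_PER_GPU) -> float:
    """Idealized time to move size_tb terabytes over one link at full rate."""
    return size_tb / link_tbps


if __name__ == "__main__":
    print(f"Aggregate NVLink bandwidth: {aggregate_nvlink_tbps():.1f} TB/s")
    print(f"Moving 3.6 TB over one link: {transfer_seconds(3.6):.1f} s")
```

Under these assumptions the rack-level total comes to roughly 130 TB/s.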

Speed up key database queries by 18X compared to CPU.

Databases play critical roles in handling, processing, and analyzing large volumes of data for enterprises. GB200 takes advantage of the high-bandwidth memory performance, NVLink-C2C, and dedicated decompression engines in the NVIDIA Blackwell architecture to speed up key database queries by 18X compared to CPU and deliver 5X better TCO.

GB200 GPUs - Top use cases and industries

Large Language Model (LLM) Inference

The GB200 introduces cutting-edge capabilities and a second-generation Transformer Engine that accelerates LLM inference workloads. It delivers a 30X speedup for resource-intensive applications such as the 1.8T-parameter GPT-MoE compared to the previous H100 generation.

Product design and development

Physics-based simulations are still the mainstay of product design and development. From planes and trains to bridges, silicon chips, and even pharmaceuticals, testing and improving products through simulation saves billions of dollars. The Cadence SpectreX simulator is one example of a solver. SpectreX circuit simulations are projected to run 13X faster on a GB200 Grace Blackwell Superchip, which connects Blackwell GPUs and Grace CPUs, than on a traditional CPU.

Data processing

Organizations continuously generate data at scale and rely on various compression techniques to alleviate bottlenecks and save on storage costs. To process these datasets efficiently on GPUs, the Blackwell architecture introduces a hardware decompression engine that can natively decompress compressed data at scale and speed up analytics pipelines end-to-end.
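As a simple illustration of where decompression sits in an analytics pipeline, the Python sketch below compresses a small column of values with the standard-library `zlib` module, then decompresses and aggregates it on the CPU. It does not use any Blackwell or NVIDIA API; the function names and the data layout are hypothetical, and the decompression call merely marks the kind of step a hardware engine is intended to offload.

```python
import zlib


def build_compressed_column(n_rows: int) -> bytes:
    """Create a newline-separated column of integers and compress it."""
    raw = "\n".join(str(i % 100) for i in range(n_rows)).encode("ascii")
    return zlib.compress(raw)


def sum_compressed_column(blob: bytes) -> int:
    """Decompress the column, then aggregate it."""
    # Software decompression on the CPU: the stage that a dedicated
    # hardware decompression engine would take over.
    raw = zlib.decompress(blob)
    return sum(int(line) for line in raw.splitlines())


if __name__ == "__main__":
    blob = build_compressed_column(1000)
    print(f"Compressed size: {len(blob)} bytes")
    print(f"Column sum: {sum_compressed_column(blob)}")
```

In a pipeline like this, every query pays the decompression cost before any analysis can start, which is why moving that step into hardware can shorten the end-to-end run.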

Stay ahead in AI innovation

Pre-register for GB200 GPUs to future-proof your projects.